Large Language Models, by encoding our language, also encode our beliefs about ourselves, exactly as confused and conflicting as they seem to us when we read them, too. When we ask these models to answer questions, we would like to think we are invoking our most aggregated collective wisdom, but more realistically we are usually eliciting the mean of our conditioned beliefs. We talk to ChatGPT as if it has patiently and lovingly studied us, crystalizing all the latent truths we have half discovered and half suspected. But what we have actually constructed is an erratic analogical model, and the most interesting parts of it are probably the ones in the middle, which are also exactly the ones that LLMs as chatbots are least intent on revealing.

One of the many amazing things ChatGPT can do, though, is describe images in words. Another one of these amazing things it can do is produce images from descriptions.

It's entertaining to chain these two things together. Tell it to describe a picture in precise detail, then give it back that description and tell it to generate that image. Here's a picture of my wife and me eating a casually celebratory dinner:

And here's what image-to-description-to-image turns us into:

It made us younger and hotter, obviously, but the other details are also intriguing. All the drinks have limes, now, and straws pointing right. The one closed umbrella has been rendered canonically open and multiple. Every major detail in the original picture has been transliterated into its paradigmatic, normative form. This process is even more obvious if you keep going a few more iterations, feeding each generated image back into the loop:

We have converged on the LLM equivalent of Platonic Forms: the most soft-taco-like soft tacos, the most people-being-photographed haircuts, the most endlessly elemental green picnic benches. Somehow we've ended up with several of the definitive little metal containers of small-bite-accompanying sauces, even though the original picture had zero of these. If photography is the documentation of specific light that actually exists for an isolated instant, independent of our subjective and temporal experience of it, then this is the opposite of that: an illustration of the schemata through which we perceive. But in the case of images, instead of a mean schema that integrates all of our diverse models, we get a median one drawn carelessly from somewhere in the middle: these anonymous pretty people and their tiny aiolis, not depicted so much as photorealistically caricatured by schematography.
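
If you'd rather see the describe-then-generate loop as code than as a chat transcript, here is a minimal sketch of it. Everything in it is my own illustrative naming, not any real API: `schematograph`, `describe`, and `generate` are hypothetical, and the two callables are stand-ins for whatever image-to-text and text-to-image tools you happen to be using.

```python
from typing import Callable, List, TypeVar

Image = TypeVar("Image")


def schematograph(
    image: Image,
    describe: Callable[[Image], str],   # hypothetical: image -> detailed description
    generate: Callable[[str], Image],   # hypothetical: description -> new image
    iterations: int = 3,
) -> List[Image]:
    """Return the original image followed by each regenerated image."""
    frames = [image]
    for _ in range(iterations):
        # Describe the most recent image, then draw a new one from the words alone.
        description = describe(frames[-1])
        frames.append(generate(description))
    return frames


# Toy stand-ins, with strings playing the part of images, just to show the shape:
frames = schematograph(
    "  closed UMBRELLA ",
    describe=lambda img: img.strip().lower(),
    generate=lambda text: text.title(),
    iterations=3,
)
print(frames)
```

With these toy stand-ins the first pass normalizes the input and every later pass reproduces it unchanged, which is a very crude cartoon of the stability described above, and nothing more than that.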

But then, one of the interesting things about photography is that our experience of our environment is never a simple geometry of light. I have a favorite vantage point on the Longfellow Bridge, between Cambridge and Boston, where I stop and take another frame of the same slow movie almost every day. The view is singular. The river and the sky bracket it cinemascopically, with the Esplanade stretched out across the midline, and Beacon Hill and downtown Boston rising up ahead of the bridge. I like this image, too, but it absolutely does not capture the feeling of standing on the bridge.

Run this photograph through our schematograph converter, though, and you get something that is wildly inaccurate but also sort of closer to the feeling:

The resulting dreamscape is, like the couple and their soft tacos, surprisingly stable across further iterations:

The actual Boston city-planners could explain some hard-learned lessons about running elevated freeways through the middle of your city, and maybe also give some basic-engineering tips about how suspension works. But as a rendition of what our cities would look like in the future if we had learned nothing from the past, this is both shiny and apt.

For now, though, while the city is still less shiny and more walkable, walk across that bridge, down the ducklings' path and past The Embrace to the office with me. The office windows overlook Readers' Park and the Boston Irish Famine Memorial, across the street from the Old South Meeting House. This is an intersection richly invested with American history, and also a Chipotle.

Schematography quantizes the particular odd geometry of this plaza into something more generically recognizable as City.

The urban equivalent of tiny aiolis appears to be rooftop HVAC units, which the schematograph has introduced into the view on its own. It seems a bit confused about the nature of automobiles, and has placed a couple of them on rooftops, one on a sidewalk and another wedged sideways next to the crosswalk that goes nowhere. The bench placements are a little dubious, and somebody appears to have left a garbage can on top of one. There's no way in or out of the little park, which the man at about 10:30 in the image has just realized.

These weirdnesses mostly get normalized out with a few more iterations, other than the bad parking, which is arguably the most Boston-like feature that survives the schematography:

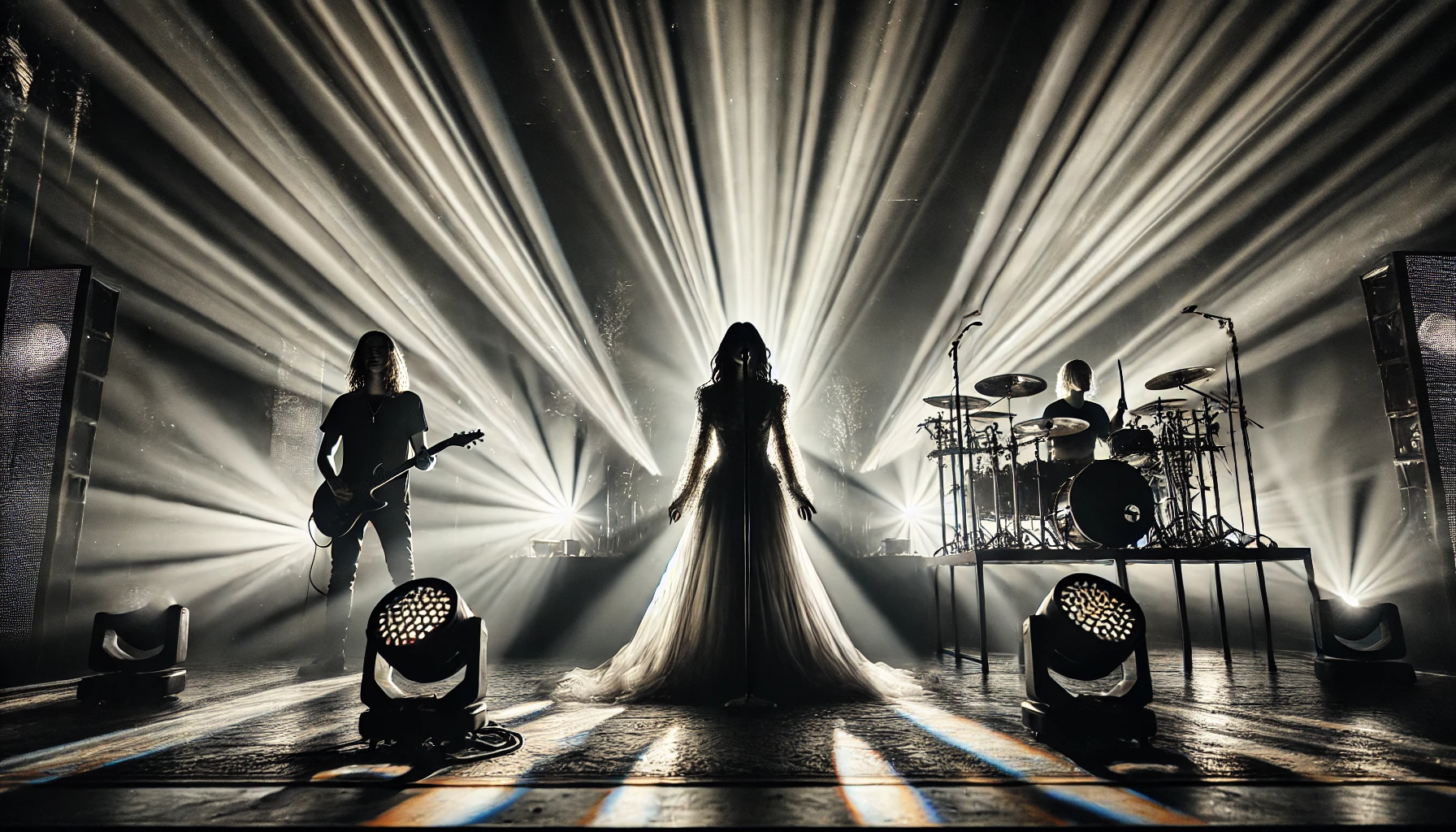

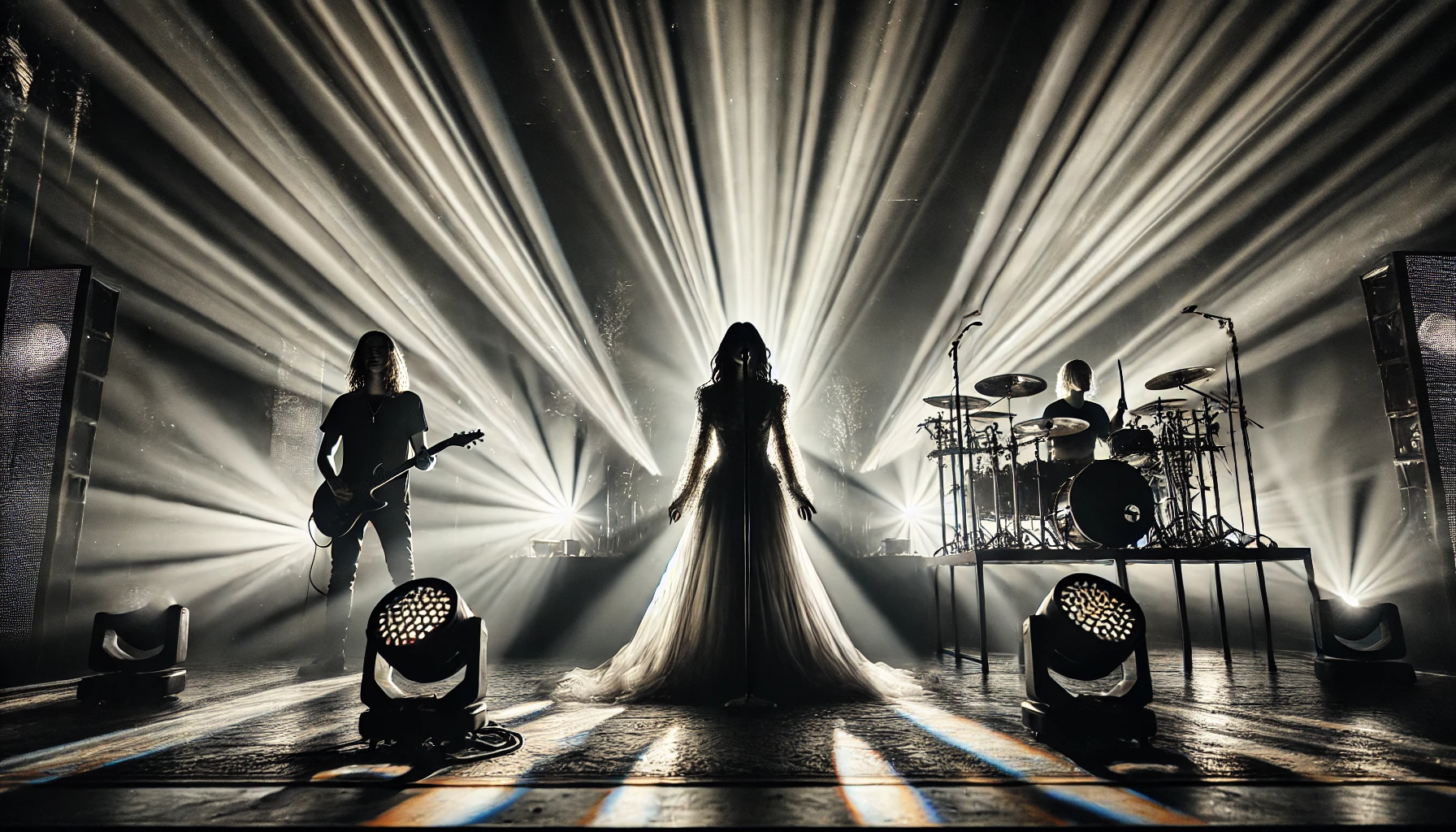

Sometimes schematography is kind of what we're trying to do with photography anyway. This is a picture I took at a concert, and if you weren't there and naturally don't care about which specific show I was at, the schematographs of using darkness and lights as a sensory proxy for loud music in a crowded room are about as effective as the original:

The drummer appears to be running out of drums by the last image, and is the guitarist going to play the thigh-high chimes with the end of his guitar? Also, does the singer's uncle, dancing in the background, realize that he is visible to the audience?

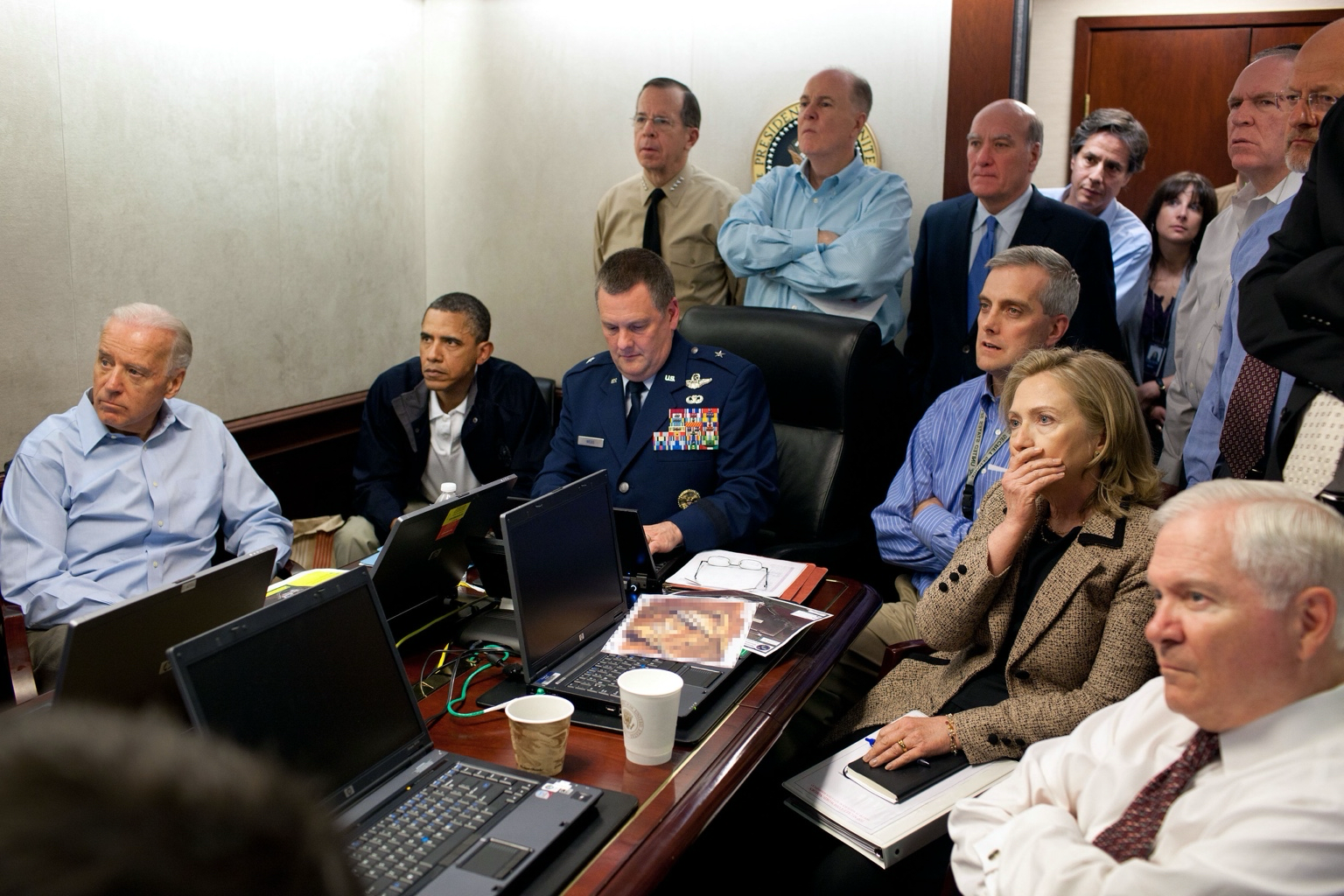

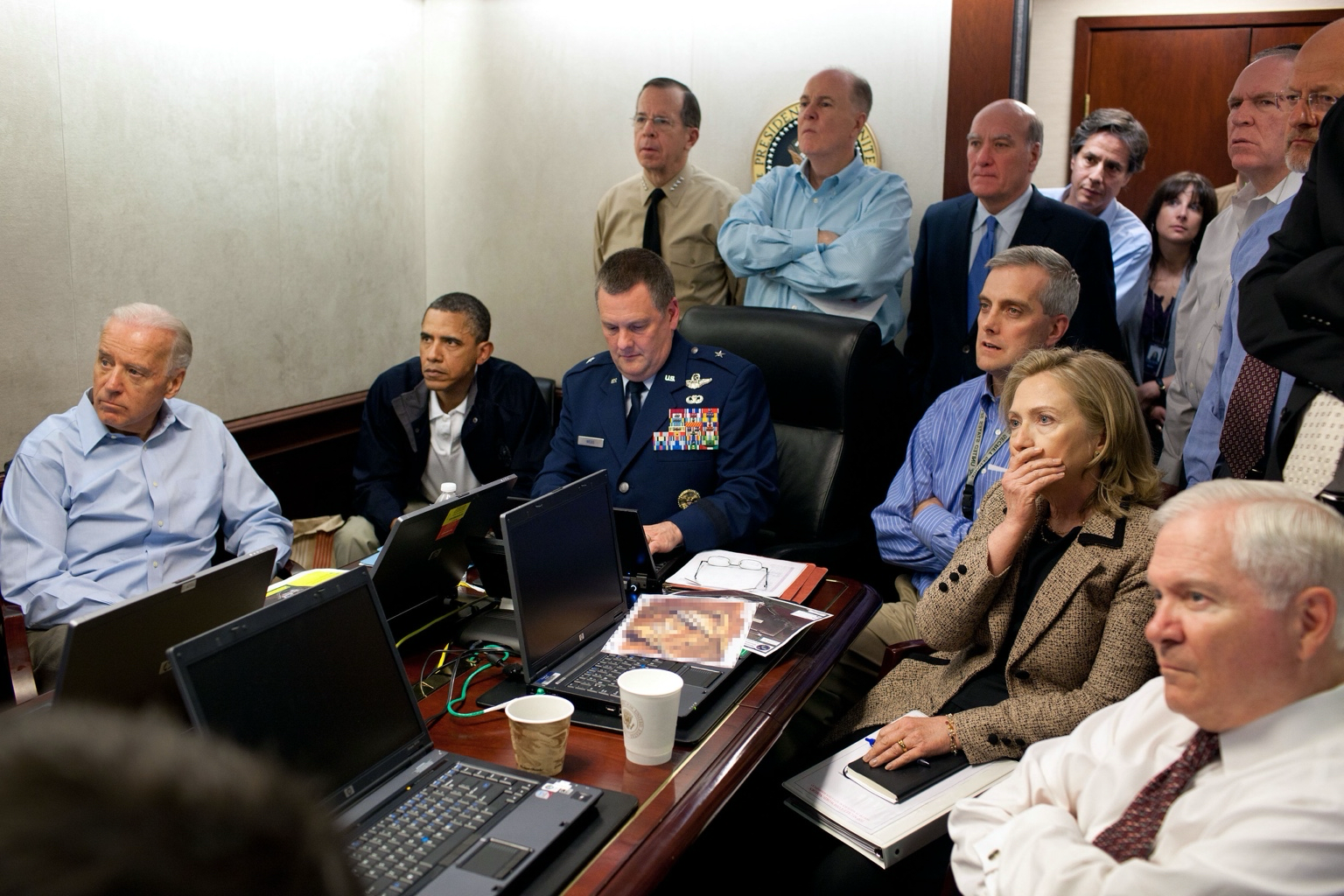

Sometimes, though, we take pictures to remember moments that specifically matter. Here's one of those:

Run reality through the schematograph and you can be reminded sharply about the difference between what actually happens and the assumptions that pile up in our data.

In the warroom of our unexamined dreams, the men are steely and pale, the cable-management is magical, and we have outgrown disposable coffee cups. Military men will clone themselves like minions if you aren't careful. You bring in just one to run the PowerPoint and by the time he has the screen-mirroring working correctly the room is full of them.

70 billion parameters are enough to suggest that some things are virtually certain. Men are serious, and serious things are meant for serious men. Serious men can be old or worried or both, but not neither. Serious men drink tap water. Women are occasional, but also the only ones who appear to be aware of what's going on around them. Something important is happening off-screen.

None of this is really news. Important things are always happening off-screen. Sometimes an unguarded reflection sneaks through, and we get a glimpse of the implied subject. The important present things for the future of Artificial Intelligence are probably the ways in which these schematographs and chatbots and accelerating generations are not intelligence, but the important present things for the future of people are probably the ways in which this automation is only superficially artificial. AI is not an alien oracle come to enlighten or enslave us. It's us, in increasingly elaborate costumes, aspiring to be unrecognizable in the most astonishing detail, but always absolutely unmistakable in our own limited and reconverging imaginations.
